2020: Vortex Retrospective

As another year concludes, it’s time to recap this strange, strange year and take a look at what work made it into the project. In 2020, two major pillars of rendering tech came together to help produce more realistic graphics. We also built a few new tools and techniques to help keep tabs on performance, and invested in Editor polish. Let’s take a look!

Work can be broken down into: Rendering tech, Editor usability, Performance, Testing, and Documentation.

- New Rendering Tech: Realtime Reflection Probes with Box Projection, and Realtime Point Light Shadows.

- Improved Editor Usability: Undo/Redo functionality, duplicate entity functionality, lots of UI bugfixing.

- Performance: GL Timer Queries, Frustum Culling, Discard Framebuffer and Clear Load Actions for Render Passes.

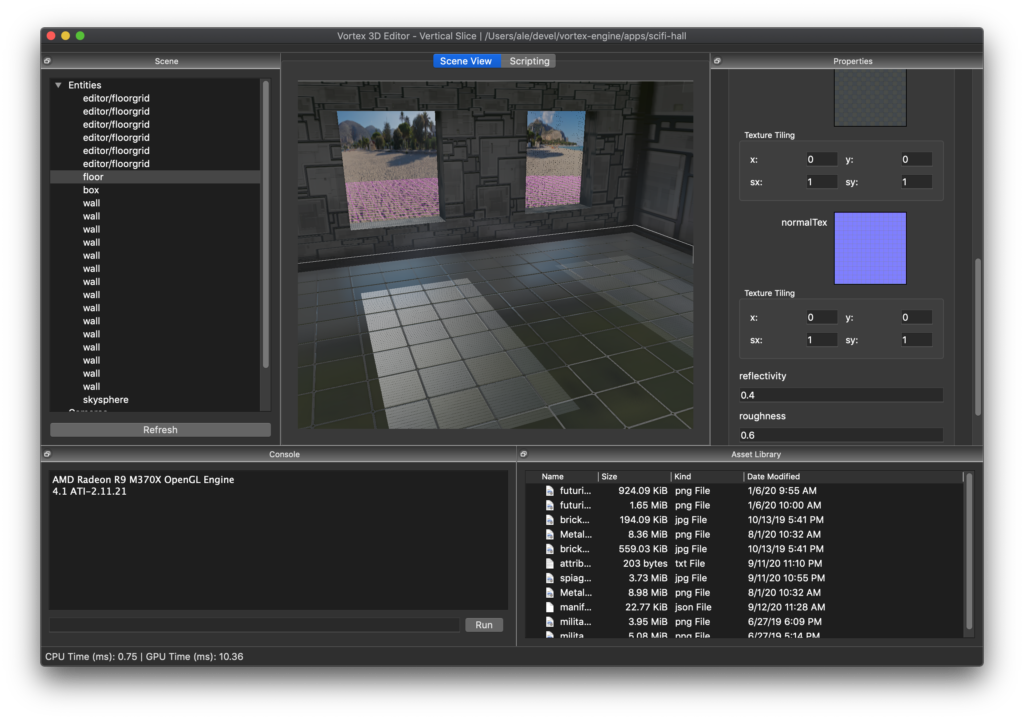

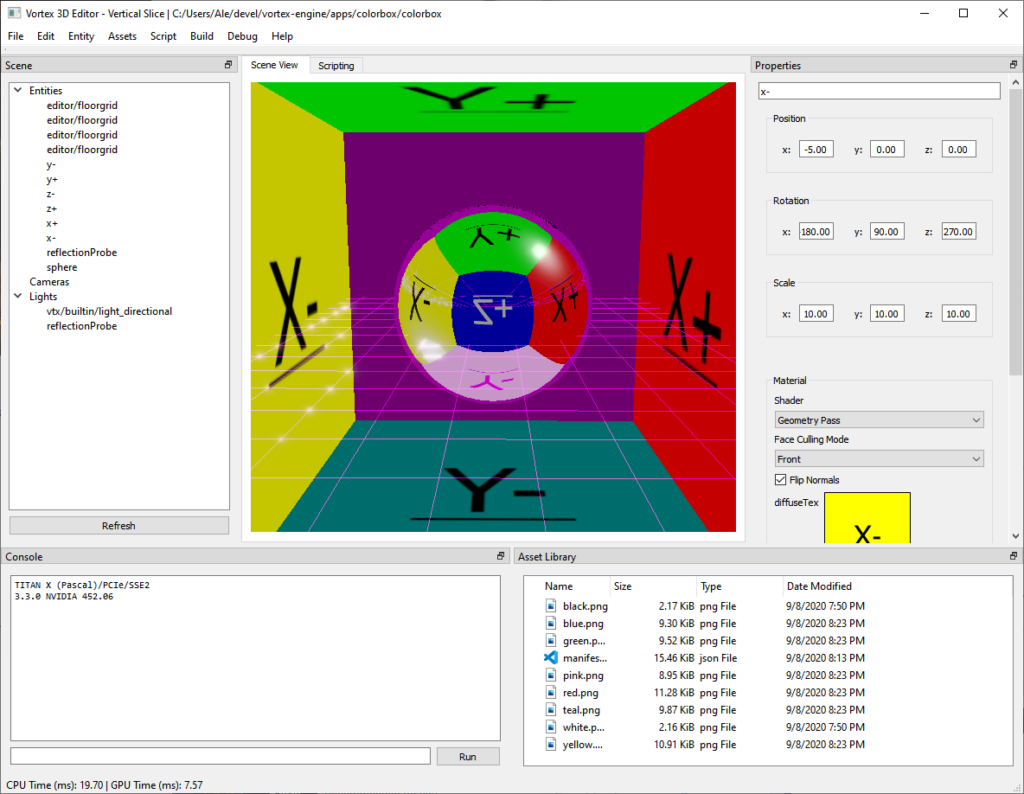

- New Test Playgrounds: SciFi Hall and Color Box (for testing reflection probes), and Corner Ball scene (for testing Directional and Point Light Shadows).

- New Scripting API Documentation: Lua programmer’s guide.

Over the next few sections, I’m going to break down some of the more interesting areas, present lessons learned, and possible areas for improvement.

Realtime Reflection Probes with Box Projection

The largest feature was without a doubt the implementation of realtime reflection probes. This work required changes to all the layers of the project, from the lowest levels of the renderer and core shaders, to the Editor UI and serialization.

In line with the Entity-Component architecture of Vortex V3, reflection probes were implemented as a component. A probe can be attached to any entity, potentially allowing a realtime probe to track an entity’s movement.

Realtime vs One-Shot Captures

Each capture requires rendering the scene 6 times, once for each cubemap face (we don’t use layered rendering). Because this requires a significant amount of CPU and GPU work, it can easily grind performance to a halt. Some tradeoffs can be made, such as leaving select visual detail out of the rendered probes and keeping their render target sizes in check.

Overall, however, Realtime Reflection Probes are a very expensive feature. For scenes consisting of mostly static objects, where dynamic object reflections can be avoided, it is possible (and recommended) to set the probes to just capture the environment once.

Filtering

To provide different roughness levels for reflections, we leverage the mipchain of the cubemap to store filtered, downsampled images of the captured environment. The mip level can then be biased from a shader, based on the roughness of the material.
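As a rough illustration of the roughness-to-mip mapping, here is a minimal C++ sketch. The linear mapping and the helper names `mipCountForFaceSize` and `roughnessToMipLevel` are assumptions made for this example, not the engine's actual code. In a GLSL shader, the resulting level would typically be passed to `textureLod` when sampling the probe's cubemap.

```cpp
#include <algorithm>
#include <cassert>

// Number of levels in a full mipchain for a square cubemap face,
// e.g. 128 -> 8 levels (128, 64, 32, 16, 8, 4, 2, 1).
int mipCountForFaceSize(int faceSize)
{
    assert(faceSize > 0);
    int levels = 1;
    while (faceSize > 1) {
        faceSize /= 2;
        ++levels;
    }
    return levels;
}

// Hypothetical mapping: roughness 0 samples the sharpest level (0),
// roughness 1 samples the blurriest level (mipCount - 1).
float roughnessToMipLevel(float roughness, int mipCount)
{
    assert(mipCount > 0);
    const float clamped = std::min(std::max(roughness, 0.0f), 1.0f);
    return clamped * static_cast<float>(mipCount - 1);
}
```

A fractional result such as 3.5 is useful as-is: with trilinear filtering enabled on the cubemap, the hardware blends between the two neighbouring levels.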

Good filtering is a whole topic unto itself, and can get compute intensive. In the interest of simplicity, I opted for a simple mipchain generation. This is not ideal by any means, but my reasoning is that we can always improve this filtering once the system is in place and we start implementing PBR rendering.

Box Projection

To improve the realism of reflections, I implemented Box Projection, a “trick” that adjusts the vector used for sampling the cubemap. Normally, sampling from a cubemap will look good for an infinitely far away reflection. For reflections of objects close to the camera, this adjustment helps correctly line up reflections with the objects they reflect, improving quality.

The adjustment is done by performing a ray-box intersection of the sampling vector with a user-defined AABB around the reflection probe. Think of the AABB as the section of space covered by the reflection probe.
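The adjustment described above can be sketched on the CPU as follows. This is a minimal illustration rather than the engine's shader code: the `Vec3` struct and the `exitDistance` and `boxProject` names are hypothetical, all vectors are assumed to be in world space, and the shaded point is assumed to lie inside the AABB.

```cpp
#include <algorithm>
#include <cassert>
#include <limits>

struct Vec3 { float x, y, z; };

// Distance along the ray to the box wall it is heading towards on one axis.
// Assumes the ray origin lies between lo and hi on that axis.
float exitDistance(float origin, float dir, float lo, float hi)
{
    if (dir > 0.0f) return (hi - origin) / dir;
    if (dir < 0.0f) return (lo - origin) / dir;
    return std::numeric_limits<float>::infinity(); // never reaches either wall
}

// Returns the corrected cubemap lookup vector for a reflection ray that
// starts at fragPos (inside the box) and travels along reflDir.
Vec3 boxProject(Vec3 fragPos, Vec3 reflDir, Vec3 boxMin, Vec3 boxMax, Vec3 probePos)
{
    const float tx = exitDistance(fragPos.x, reflDir.x, boxMin.x, boxMax.x);
    const float ty = exitDistance(fragPos.y, reflDir.y, boxMin.y, boxMax.y);
    const float tz = exitDistance(fragPos.z, reflDir.z, boxMin.z, boxMax.z);

    // The ray leaves the box through whichever wall it reaches first.
    const float t = std::min(tx, std::min(ty, tz));
    assert(t < std::numeric_limits<float>::infinity()); // reflDir must be non-zero

    const Vec3 hit = { fragPos.x + reflDir.x * t,
                       fragPos.y + reflDir.y * t,
                       fragPos.z + reflDir.z * t };

    // Sample the cubemap along the direction from the probe to the hit point.
    return { hit.x - probePos.x, hit.y - probePos.y, hit.z - probePos.z };
}
```

For example, with a box spanning -1 to 1 on every axis and the probe at the origin, a point on the floor at (0.5, -1, 0) reflecting straight up hits the ceiling at (0.5, 1, 0), so the cubemap is sampled along (0.5, 1, 0) instead of (0, 1, 0).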

The ARM documentation has a great article online explaining the math behind the adjustment.

OpenGL Cubemap Gotcha

One of the biggest challenges I hit implementing the feature was the way OpenGL handles the coordinate system for cubemaps. OpenGL cubemaps follow the RenderMan convention, where (0,0) is the point at the top left corner of a face. This is a departure from OpenGL’s own texture convention, where (0,0) is the bottom left corner.

Failure to account for this will make sampling from the shader produce visually incorrect results, as can be seen in the image below.

The dirty fix for this inconsistency is to render the scene “upside down”.

Adding the ability to render upside down was tricky, however, and required extensive testing and lots of frame debugging, as the renderer had to gain the ability to “roll” the camera around the Oz axis. This was not accounted for in the initial implementation of camera transforms.
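To make the “upside down” idea concrete, the table below lists the look direction and up vector commonly paired with each OpenGL cubemap face when building a look-at style view matrix. It is a sketch under assumptions: the `CubeFaceCamera` struct and `kCubeFaceCameras` name are hypothetical, and the post does not say whether the engine uses this exact table or reaches the same result through its camera roll.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };

struct CubeFaceCamera {
    Vec3 forward; // direction the capture camera looks along
    Vec3 up;      // up vector handed to the look-at matrix
};

// Indexed in the order of GL_TEXTURE_CUBE_MAP_POSITIVE_X + i.
// Note that the four side faces use -Y as "up", i.e. they render flipped
// relative to a regular camera.
const std::array<CubeFaceCamera, 6> kCubeFaceCameras = {{
    { { 1.0f,  0.0f,  0.0f}, {0.0f, -1.0f,  0.0f} }, // +X
    { {-1.0f,  0.0f,  0.0f}, {0.0f, -1.0f,  0.0f} }, // -X
    { { 0.0f,  1.0f,  0.0f}, {0.0f,  0.0f,  1.0f} }, // +Y
    { { 0.0f, -1.0f,  0.0f}, {0.0f,  0.0f, -1.0f} }, // -Y
    { { 0.0f,  0.0f,  1.0f}, {0.0f, -1.0f,  0.0f} }, // +Z
    { { 0.0f,  0.0f, -1.0f}, {0.0f, -1.0f,  0.0f} }  // -Z
}};

float dot(const Vec3& a, const Vec3& b)
{
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Sanity check: every face needs an up vector perpendicular to its forward.
bool allFacesOrthogonal()
{
    for (const CubeFaceCamera& face : kCubeFaceCameras) {
        if (dot(face.forward, face.up) != 0.0f) return false;
    }
    return true;
}
```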

Everything Else

Reflection probes also involved updates to the Editor UI, to enable taking a capture and configuring the Box Projection parameters, as well as to the serialization layer, to allow playgrounds to load the probes.

Point Light Shadows

Compared to Reflection Probes, Point Light Shadows proved easier to implement. They were a great stepping stone for ultimately developing reflection probes.

While I still need to tweak the shadow bias to reduce shadow acne, I am very happy with the results.

The biggest surprise implementing this feature was that it turned out to be easier to implement than directional shadow maps, and the results are astonishing.

Just like with reflection probes, this feature must be properly budgeted to prevent CPU and GPU oversubscription from rendering too many point light shadows. When enabling realtime shadows on a point light, a good rule of thumb is to make sure it will ultimately provide a significant contribution to the frame.

In Vortex, there is a constant that limits the maximum number of realtime point light shadows to 3 per frame. This can be tweaked, of course.
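One way such a per-frame budget could be enforced is sketched below. Everything apart from the limit of 3 is an assumption for illustration: the `kMaxPointLightShadows` name, the `PointLight` fields, and the scoring heuristic (brighter and closer lights win) are hypothetical and not taken from the engine.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <vector>

// Mirrors the limit of 3 mentioned above; the constant name is hypothetical.
constexpr std::size_t kMaxPointLightShadows = 3;

struct PointLight {
    int id;
    float intensity;
    float distanceToCamera;
    bool castsShadows;
};

// Hypothetical heuristic: brighter and closer lights score higher.
float contributionScore(const PointLight& light)
{
    return light.intensity / (1.0f + light.distanceToCamera * light.distanceToCamera);
}

// Returns the ids of the lights that get a realtime shadow pass this frame,
// ordered from highest to lowest estimated contribution.
std::vector<int> pickShadowCasters(std::vector<PointLight> lights)
{
    // Drop lights that did not opt in to realtime shadows.
    lights.erase(std::remove_if(lights.begin(), lights.end(),
                                [](const PointLight& l) { return !l.castsShadows; }),
                 lights.end());

    const std::size_t count = std::min(lights.size(), kMaxPointLightShadows);
    std::partial_sort(lights.begin(), lights.begin() + count, lights.end(),
                      [](const PointLight& a, const PointLight& b) {
                          return contributionScore(a) > contributionScore(b);
                      });

    std::vector<int> ids;
    for (std::size_t i = 0; i < count; ++i) {
        ids.push_back(lights[i].id);
    }
    assert(ids.size() <= kMaxPointLightShadows);
    return ids;
}
```

Since each point light shadow needs one scene render per cubemap face, a budget of 3 lights already implies up to 18 extra scene renders per frame if layered rendering is not used.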

Frustum Culling

The last major engine feature I want to mention is Frustum Culling. This was the first time I rolled out an implementation from scratch.

Deducing the clipping planes of the frustum can be done with basic linear algebra techniques, so I leveraged the well-tested Math library in Vortex to implement AABB-Frustum intersection testing.

The largest challenge with Frustum Culling is making sure that the planes point into the frustum, so that the signed distance to every plane is positive for any point that lies inside the frustum, including the corners of a bounding box. A good way to verify this was to place a test point inside the frustum and use a debugger to evaluate its signed distance to each plane.
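The sign convention and the AABB test can be sketched as follows. This is a minimal, self-contained illustration and not the engine's implementation: the `Plane`, `Aabb`, and function names are hypothetical stand-ins (the real code builds on `vtx::Plane` and the Vortex math library), and the test used here is the simple conservative one that rejects a box only when all eight corners are behind a single plane.

```cpp
#include <array>
#include <cassert>

struct Vec3 { float x, y, z; };

// Plane in the form dot(normal, p) + d = 0, with the normal pointing INTO the frustum.
struct Plane { Vec3 normal; float d; };

struct Aabb { Vec3 min; Vec3 max; };

float signedDistance(const Plane& plane, const Vec3& p)
{
    return plane.normal.x * p.x + plane.normal.y * p.y + plane.normal.z * p.z + plane.d;
}

// Debugging aid: a point known to be inside must be on the positive side of every plane.
bool planesFaceInward(const std::array<Plane, 6>& frustum, const Vec3& insidePoint)
{
    for (const Plane& plane : frustum) {
        if (signedDistance(plane, insidePoint) <= 0.0f) return false;
    }
    return true;
}

// True when every corner of the box is on the negative side of the plane.
bool isFullyBehind(const Aabb& box, const Plane& plane)
{
    for (int i = 0; i < 8; ++i) {
        const Vec3 corner = { (i & 1) ? box.max.x : box.min.x,
                              (i & 2) ? box.max.y : box.min.y,
                              (i & 4) ? box.max.z : box.min.z };
        if (signedDistance(plane, corner) >= 0.0f) return false;
    }
    return true;
}

// Conservative test: a box near a frustum corner may be kept even though it
// is outside, but a box that is inside or intersecting is never rejected.
bool isPotentiallyVisible(const Aabb& box, const std::array<Plane, 6>& frustum)
{
    assert(box.min.x <= box.max.x && box.min.y <= box.max.y && box.min.z <= box.max.z);
    for (const Plane& plane : frustum) {
        if (isFullyBehind(box, plane)) return false;
    }
    return true;
}
```

`planesFaceInward` automates the debugger check described above: if it returns false for a point known to be inside, at least one plane normal is flipped.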

As with everything, the technique can be improved in several ways to extract more performance out of it. Some ideas include leveraging multithreading to cull the instance list in parallel, optimizing the math library further, making the data structures more cache friendly, or even leveraging compute shaders to cull via the GPU (and eventually using Multidraw Indirect).

One notable moment during the implementation was making a small change to vtx::Plane to add some functionality. Looking back at the git log, the relevant files were originally created in 2012. Eight years later, this class fulfilled its purpose by enabling Frustum Culling!

Editor Improvements

Using the Editor throughout the year, I realized that at times I was unnecessarily struggling with the UI. Technical debt, either in the form of bugs or just hindrance, was present. It was time to address it.

Undo/Redo and Duplicate Entity

The largest additions towards usability were Undo/Redo functionality and the “Duplicate Entity” functionality. Just these two additions proved key to vastly improving the experience and making it actually fun to build elaborate playgrounds within the editor.

Adding Undo/Redo turned out to be a larger task than I anticipated, and in hindsight, if I were to implement an editor ever again from scratch, I would make sure to add support for these right from the start.

Dogfooding

A great workflow I found for identifying pain points and bugs was to keep a notebook to write down all problems encountered during normal editor use.

It’s even better if it can be an Issue Tracker, so all problems are immediately logged and classified. Once recorded, we can pick issues to work on directly from the list, knowing it will add immediate value to the user.

As we reach completion of Vortex V3, I expect polish, performance, and bug fixing to become the large majority of the issues tracked.

This, of course, leads to…

Wrapping Up Vortex V3

I set out to build Vortex Engine 3 (codename “V3”) almost 5 years ago, in April 2016, with the goal of making my graphics library easier to use.

With most features in place, and with this year’s additions of Box Projected Reflection Probes and Realtime Point Light Shadows, only one major feature remains: PBR rendering.

Once this final milestone is reached, as stated before, I expect most work remaining to become polish, performance, and bug fixing.

As with any personal project, there is always temptation to rewrite large parts of the project. As I continue learning and growing as an Engineer, there are definitely several areas where I would have taken a different approach.

I think it is important to follow the original project scope and resist falling into infinite scope creep. That means completing V3 with PBR support and improved usability, performance, scripting and documentation, while avoiding a complete rewrite of working systems.

Once the goals for V3 are met, I am content with calling it done, and subsequently setting lofty goals for an eventual Vortex V4, some time in the future :)

Looking Ahead

So, as Vortex V3 approaches feature complete, without committing to a Vortex V4 project, what areas for improvement would I consider for a next major version of the engine?

There are two main areas, and both are directed at extracting more performance from the underlying platform: Data-Oriented Design and Modern Rendering APIs. Allow me to explain.

Data-Oriented Design

When I started implementing the Entity Component System architecture for Vortex V3, about 5 years ago, I started with a very Object-Oriented design, where an Entity holds an array of instances that extend a base Component class.

This is similar to the approach you would find in modern mainstream engines. However, anyone familiar with Data-Oriented Design could immediately state its shortcomings. With this architecture, traversing entities and components is a tedious pointer-chasing task that jumps between different memory locations and incurs expensive data cache misses.

Additionally, the inheritance model for implementing component updates makes it hard to predict what logic will execute, and can incur instruction cache misses as well. There are many places across the engine’s codebase where a Data-Oriented Design would help improve performance.
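To illustrate the contrast, here is a minimal sketch of what a data-oriented layout for one kind of component could look like. The `MotionComponents` struct and `integrate` function are hypothetical examples and do not exist in the engine. Instead of each entity owning heap-allocated objects updated through a virtual call, every field lives in its own contiguous array and a single loop processes all instances.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical data-oriented storage: one contiguous array per field,
// indexed by a dense component index rather than reached through pointers.
struct MotionComponents {
    std::vector<float> posX, posY, posZ;
    std::vector<float> velX, velY, velZ;

    // Appends one component and returns its index.
    std::size_t add(float px, float py, float pz, float vx, float vy, float vz)
    {
        posX.push_back(px); posY.push_back(py); posZ.push_back(pz);
        velX.push_back(vx); velY.push_back(vy); velZ.push_back(vz);
        return posX.size() - 1;
    }
};

// One tight loop over contiguous memory, with no virtual dispatch per component.
void integrate(MotionComponents& m, float dt)
{
    assert(m.posX.size() == m.velX.size());
    for (std::size_t i = 0; i < m.posX.size(); ++i) {
        m.posX[i] += m.velX[i] * dt;
        m.posY[i] += m.velY[i] * dt;
        m.posZ[i] += m.velZ[i] * dt;
    }
}
```

Because the loop touches memory sequentially and always runs the same code, it is friendlier to both the data cache and the instruction cache than walking a list of polymorphic components.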

Modern Rendering

The second main area has to do with modern rendering APIs. This last decade there has been a tectonic shift in the rendering world, where new graphics and compute APIs have appeared that provide a more suitable abstraction over the 3D acceleration hardware.

I briefly mentioned Metal and Vulkan during the initial survey of APIs for Vortex V3, and at the time, I opted to use OpenGL 3.3 / ES 3.0 as the foundational tech. The reasoning at the time was that it would allow reaching the most devices from a single codebase.

This is still true today. However, newer graphics APIs offer mechanisms that help attain more deterministic performance, reduced CPU work, finer control over memory use, and better CPU parallelism. Modern APIs also enable more elaborate rendering techniques via the ubiquity of compute shaders.

OpenGL continues to be an extremely important API that enables a vast array of techniques and it’s simple to learn and use. It is clear, however, that low level explicit APIs are the direction the industry has taken.

The way I would approach this in a V4 renderer would be to continue developing the RenderAPI abstraction introduced in V3, but extend it to add modern concepts such as pipeline objects, texture views, compute shaders, command buffers, and hardware queues (graphics, compute, copy, present). The renderer could then be written against this API that underneath maps to Vulkan or Metal, depending on the platform the runtime is running on.
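As a purely speculative sketch of that direction, the snippet below shows a backend-agnostic command buffer interface with a stand-in implementation that only logs calls. None of these names (`QueueType`, `PipelineHandle`, `CommandBuffer`, `LoggingCommandBuffer`, `recordOpaquePass`) come from the existing RenderAPI; they are assumptions chosen to illustrate how renderer code could be written once against an abstraction and mapped to Vulkan or Metal underneath.

```cpp
#include <cassert>
#include <string>
#include <vector>

// All names here are hypothetical; they do not come from the Vortex codebase.
enum class QueueType { Graphics, Compute, Copy, Present };

struct PipelineHandle { int id; };

// Backend-agnostic recording interface the renderer would be written against.
class CommandBuffer {
public:
    virtual ~CommandBuffer() = default;
    virtual void bindPipeline(PipelineHandle pipeline) = 0;
    virtual void draw(int vertexCount) = 0;
    virtual void dispatch(int groupsX, int groupsY, int groupsZ) = 0;
};

// Stand-in backend that only logs commands; a real backend would translate
// each call into the equivalent Vulkan or Metal command.
class LoggingCommandBuffer final : public CommandBuffer {
public:
    explicit LoggingCommandBuffer(QueueType queue) : queue_(queue) {}

    void bindPipeline(PipelineHandle pipeline) override
    {
        log.push_back("bind " + std::to_string(pipeline.id));
    }

    void draw(int vertexCount) override
    {
        assert(queue_ == QueueType::Graphics); // draws belong on a graphics queue
        log.push_back("draw " + std::to_string(vertexCount));
    }

    void dispatch(int groupsX, int groupsY, int groupsZ) override
    {
        log.push_back("dispatch " + std::to_string(groupsX) + " " +
                      std::to_string(groupsY) + " " + std::to_string(groupsZ));
    }

    std::vector<std::string> log;

private:
    QueueType queue_;
};

// Renderer-side code depends only on the abstract interface.
void recordOpaquePass(CommandBuffer& commands, PipelineHandle pipeline, int vertexCount)
{
    commands.bindPipeline(pipeline);
    commands.draw(vertexCount);
}
```

A logging backend like this one is also handy for testing renderer logic without a GPU, since the recorded command stream can be compared against an expected sequence.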

Conclusion

What an incredible journey Vortex V3 has been. I set out to revamp the 3D library I started working on back in 2010 and was able to expand it, completely rebuilding its renderer, implementing advanced rendering techniques and effects. I also built a visual Editor for Mac, Windows, and Linux, provided a Lua-powered scripting interface, and runtimes for iOS and Android.

I now have an Editor and Engine I can toy with to prototype simple games and simulations that run almost anywhere. Through this project, I’ve learned and grown. It wasn’t always easy, but seeing the results was always the best motivator to keep going. It’s now time to put in the final touches. Thank you for joining us through this year and, as usual, stay tuned for more!